Insights from an AI Scientist on Enhancing Safety in Autonomous Systems

Approved for public release: This paper described in this article is based upon work supported by the Department of Defense (DoD) through the National Defense Science & Engineering Graduate (NDSEG) Fellowship Program, the Air Force Research Laboratory Innovation Pipeline Fund, and the Autonomy Technology Research (ATR) Center administered by Wright State University. The views expressed are those of the authors and do not reflect the official guidance or position of the United States Government, the DoD, or the United States Air Force. This paper titled "Ablation Study of How Run Time Assurance Impacts the Training and Performance of Reinforcement Learning Agents" was approved for public release: distribution unlimited. Case Number AFRL-2022-0550.

We’re delving into a Q&A with Dr. Nate Hamilton, a Parallax Advanced Research AI scientist specializing in Safe Reinforcement Learning (SafeRL), on the fascinating world of AI and robotics. Dr. Hamilton joined Parallax’s Intelligent Systems Division in August 2022 upon graduating with his Ph.D. in Electrical Engineering from Vanderbilt University. While at university, Dr. Hamilton conducted his doctoral research on "Safe and Robust Reinforcement Learning for Autonomous Cyber-Physical Systems" and was a research assistant at the Verification and Validation of Intelligent and Trustworthy Autonomy Laboratory (VeriVITAL). He is also a National Defense Science and Engineering Graduate Fellow.

Caption: Dr. Nate Hamilton, a Parallax Advanced Research AI scientist

BACKGROUND: EXPLORING THE ROOTS OF INTEREST

Dr. Hamilton’s passion lies in SafeRL, which involves infusing safety considerations into the learning process to ensure high-performing AI solutions also prioritize safety. This approach finds applications in various control scenarios, such as spacecraft docking or autonomous driving. Dr. Hamilton’s journey began with a project involving quadrotors and their inconsistent flight behavior, which he conducted in graduate school. This spurred him to explore how safety could be incorporated into the learning process to enhance performance and reliability.

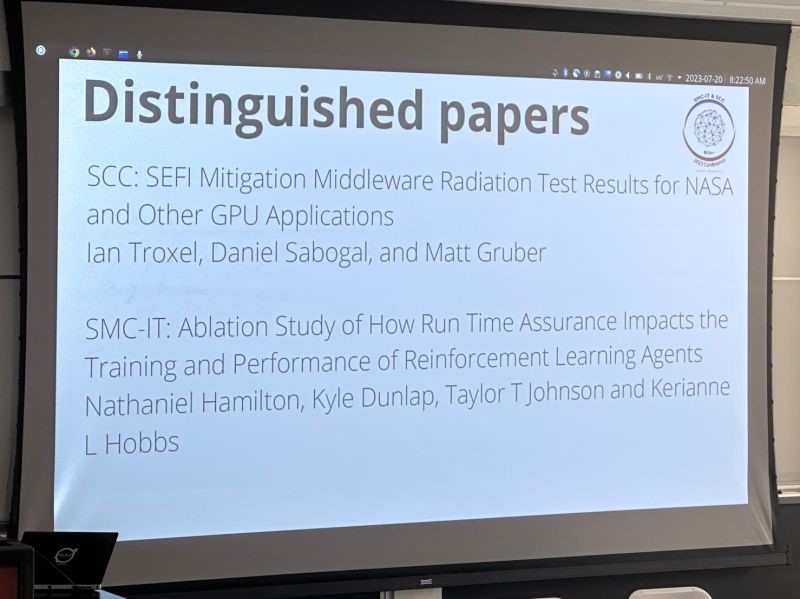

In July 2023, Dr. Hamilton and his colleagues, Kyle Dunlap, Taylor T Johnson, and Kerianne L Hobbs, celebrated the publication of the research “Ablation Study of How Run Time Assurance Impacts the Training and Performance of Reinforcement Learning Agents” in the Institute of Electrical and Electronics Engineers (IEEE) Xplore issue. IEEE is the world’s largest technical professional organization dedicated to advancing technology for the benefit of humanity. The paper was presented at the 2023 IEEE 9th International Conference on Space Mission Challenges for Information Technology on 18-27 July 2023 at the California Institute of Technology in Pasadena, CA. Dr. Hamilton also won a distinguished paper for the IEEE SMC-IT conference (for best paper).

Caption: Dr. Nate Hamilton presenting “Ablation Study of How Run Time Assurance Impacts the Training and Performance of Reinforcement Learning Agents” at the 2023 IEEE 9th International Conference on Space Mission Challenges for Information Technology on 18-27 July 2023 at California Institute of Technology in Pasadena, CA.

The study explores the increasing significance of Reinforcement Learning (RL)* in machine learning and the safety concerns that persist during the training of RL agents. To address this, SafeRL emerged with procedures and algorithms that ensure agents respect safety constraints during the learning and/or deployment process and investigate the influence of run time assurance (RTA)*. Dr. Hamilton and his team’s study sought to uncover the dynamics of SafeRL by conducting ablation studies. These studies, inspired by neurology experiments, involved removing or altering components within the RL process to identify their impact on learning and performance. The experiments addressed whether agents become overly reliant on safety systems and which safety mechanisms are most effective. The team assessed various RTA approaches within on-policy and off-policy RL algorithms to ascertain their efficacy, agent reliance on RTA, and the interplay between reward shaping and safe exploration in training. Their findings shed light on the trajectory of SafeRL, while their evaluation methodology enhances future SafeRL comparisons. Learn more about the research here.

*Key terms:

Reinforcement Learning (RL) is a branch of machine learning that focuses on training optimal behavior through trial and error. An agent takes actions in an environment, receives rewards based on the quality of those actions, and uses this feedback to improve its future decision-making.

Run Time Assurance (RTA) is an online monitoring technique used to ensure the safety of an agent's behavior during its operation. It involves hooking up a high-performing but potentially unpredictable primary control (in this case, the RL-trained agent) with a backup control. Depending on the specific RTA approach, it either switches between these controls when unsafe situations are detected or makes minimal adjustments at each time step to guide the agent away from hazardous scenarios.

RESEARCH PROCESS AND CHALLENGES

Q: What were the primary objectives of your study, and how does it fit into the broader scientific context?

Dr. Hamilton: In our recent study, we delved into SafeRL methods from existing literature. Our approach systematically analyzed these methods, dissecting their variations through an ablation study involving removing components to understand their impact. Our experiments aimed to address specific questions, yielding insights into the complex interaction between SafeRL methods and the broader scientific context.

Our five key questions detailed in the paper include:

- Do agents become overly reliant on safety systems like RTA?

- Which RTA configurations most affect and facilitate learning?

- Which RTA strategies are the most conducive to learning?

- How do various RL algorithms interact with these safety mechanisms?

- Is crafting a precise reward function or using safety mechanisms more crucial for learning?

Q: Walk us through the methodology and experimental design of your study.

Dr. Hamilton: Our methodology is inspired by "ablation studies," originally developed in the 1990s and used for studying rat brains. Instead of manipulating brains, we altered RL components to observe their impact on learning and assessing effects on training and performance. We analyze changes in sample complexity and final performance and explore instances where training involves RTA*, but evaluation doesn't, revealing potential dependence formation.

Conducting these experiments was challenging. We trained 880 RL agents, demanding coordination and significant time despite parallel processing. The major challenge was organization due to the agent count. Training took over a week.

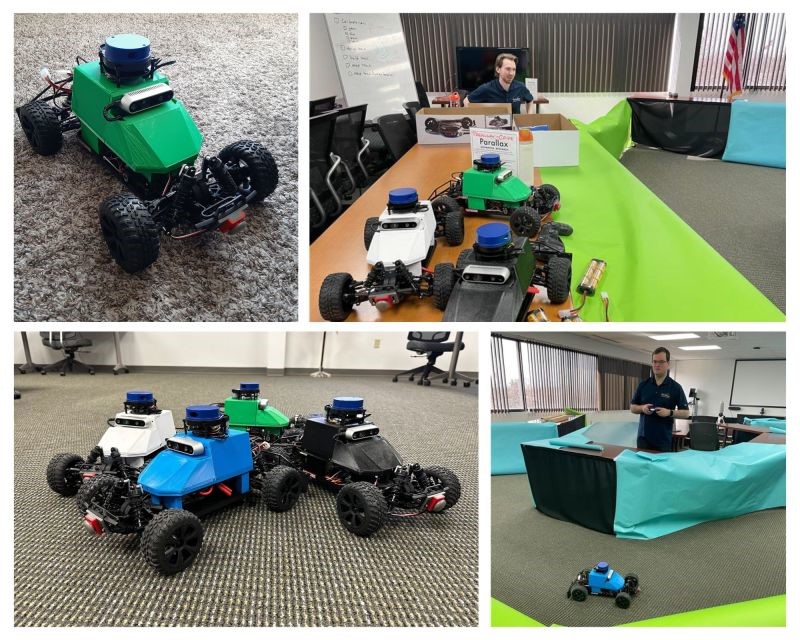

Caption: Dr. Hamilton and Kyle Dunlap of the Parallax Intelligent Systems Division team test autonomous 1/10th scale cars inspired by the MuSHR platform on a racetrack.

KEY DISCOVERIES AND IMPLICATIONS

Q: Could you summarize the most significant discoveries from your study and their real-world implications?

Dr. Hamilton: Our study uncovered several significant findings with notable real-world implications across industries for the development of autonomous systems, autonomous vehicles, and the implementation of current infrastructure initiatives. In autonomous driving, for example, human drivers serve as run-time assurance, intervening when the vehicle's behavior seems unsafe. Similarly, our research emphasizes the need for human intervention to ensure safety in AI systems. Even with such intervention, our study highlights the risk of system dependence. Removing this intervention could disrupt the system's intended functioning. This prompts deeper questions about AI's autonomy and the importance of human intuition in complex scenarios. Applied to autonomous vehicles, our findings stress the necessity of human backup for safety.

- Training with RTA carries the inherent risk of fostering agent dependence on RTA.

- The Explicit Simplex RTA approach emerged as the most effective method for training safe agents efficiently.

- Integrating cost components linked to safety violations or RTA usage was identified as a strategy to diminish agent dependence and cultivate safer behaviors.

- On-policy RL algorithms like Proximal Policy Optimization (PPO) outperformed off-policy algorithms like Soft Actor-Critic (SAC) when used with RTA.

- Crafting a well-defined reward function proved crucial for effectively teaching desired behaviors. This importance surpassed the impact of guiding the agent through RTA.

Q: How do these discoveries challenge or expand existing theories in the field?

Dr. Hamilton: The outcomes of our study bring to light fresh inquiries regarding the exploration of safety in the future. We now face questions about acceptable constraints that might occasionally need to be transgressed—such as momentarily exceeding speed to merge safely on a highway and avoid collisions. The challenge lies in distinguishing these exceptional scenarios for an agent learning to operate consistently, safely, and reliably. These discoveries challenge our understanding of balancing safety with practicality in the evolving landscape of autonomous systems.

Q: How might your findings benefit society or contribute to technological advancements in the future?

Dr. Hamilton: Our findings carry significant potential benefits for society and the advancement of technology. One key insight is the potential risk of agent dependence on RTA, prompting caution against deploying systems without rigorous testing or including RTA.

In our study, we focused on spacecraft docking* as our target platform. This is a crucial consideration, especially for space operations involving missions that require autonomous systems. The findings hold importance for scenarios where expensive spacecraft need reliable backup mechanisms. The aspiration is to streamline operations by creating autonomous systems capable of managing backups and contingency plans, ultimately leading to increased mission efficiency and a faster turnaround. This knowledge could revolutionize space missions, allowing for more autonomy and reducing the need for extensive human planning for potential failures.

*Key term: Space docking platforms refer to a common challenge in spacecraft operations. It involves docking maneuvers between a large "chief" spacecraft and a smaller "deputy" spacecraft.

COLLABORATION AND FUTURE DIRECTIONS

Photo by charlesdeluvio on Unsplash

Q: How did collaboration shape your research, what were the contributions of your team members and collaborators, and how do you envision collaboration influencing the future of scientific exploration in this field?

Dr. Hamilton: Collaboration played a pivotal role in shaping our research and will continue to drive scientific exploration, ensuring comprehensive insights. In terms of the contributions of my team members and collaborators:

- Kyle Dunlap designed and constructed the RTA approaches used in the study. His expertise in RTA is detailed in the paper "Comparing Run Time Assurance Approaches for Safe Spacecraft Docking."

- Taylor T Johnson, my advisor, provided consistent guidance and posed critical questions that refined our methods and experiments.

- Kerianne Hobbs played a key role in initiating the study, encouraging a thorough examination of related works, and shaping the study's trajectory.

Moving forward, we intend to broaden the research scope by exploring various problems, environments, and scenarios. This expansion aims to validate the conclusions drawn from our current study across different scenarios. We are also considering more variations to the problem, including additional types of RTA and configurations. Additionally, we're looking at the impact of adding sensors to the observation space, exploring whether more data enhances AI performance or leads to overload.

ENGAGING THE PUBLIC AND INSPIRING YOUNG SCIENTISTS

Image by Cheska Poon from Pixabay

Q: How do you aim to communicate your findings to the public and foster greater scientific literacy?

Dr. Hamilton: I'm passionate about making research more approachable and contributing to a scientifically informed public. I'm developing a user-friendly website to present experiments with clarity and build an understanding of complex findings. This includes simplifying terms and improving communication channels. While I'll continue publishing papers, I'm also focused on creating accessible ways to share results, starting with the website. My vision is that it will feature dedicated pages for each experiment, streamlining engagement compared to lengthy appendices.

Q: What challenges do you perceive in bridging the gap between complex scientific concepts and public understanding?

Dr. Hamilton: The primary challenge lies in terminology. In RL, the terminology is often inconsistently defined and used, creating communication hurdles within the community and making it even more challenging to convey to the public. For instance, terms like "rollout," "episode," and "run" are sometimes used interchangeably, while they represent distinct aspects of similar processes, like a rollout comprising episodes and runs signifying full training rounds. This issue resonates deeply with me. As scientists, we possess a wealth of data and conclusions, often confined to our own understanding. The challenge is to communicate this knowledge effectively.

Q: How do you envision inspiring and nurturing the curiosity of aspiring young scientists?

Dr. Hamilton: If we can make the education side of things more streamlined, the overflowing curiosity of young scientists can push us to answer questions we haven’t thought to ask yet. If there were a website that documents the experiments done and encourages contributions, that could be a great way to crowdsource discoveries.

###

About Parallax Advanced Research and the Ohio Aerospace Institute:

Parallax Advanced Research and the Ohio Aerospace Institute form a collaborative affiliation to drive innovation and technological advancements in Ohio and for the Nation. Parallax, a 501(c)(3) private nonprofit research institute, tackles global challenges through strategic partnerships with government, industry, and academia. It accelerates innovation, addresses critical global issues, and develops groundbreaking ideas with its partners. With offices in Ohio and Virginia, Parallax aims to deliver new solutions and speed them to market. The Ohio Aerospace Institute, based in Cleveland, Ohio, fosters collaborations between universities, aerospace industries, and government organizations and manages aerospace research, education, and workforce development projects. OAI is pivotal in advancing the aerospace industry in Ohio and the Nation. More information about both organizations can be found at the Parallax and OAI websites.