Golden Dome will lean hard on AI for track correlation, shot recommendation, and interceptor allocation. If that AI isn’t proven and explainable, commanders will hesitate at the worst possible moment.

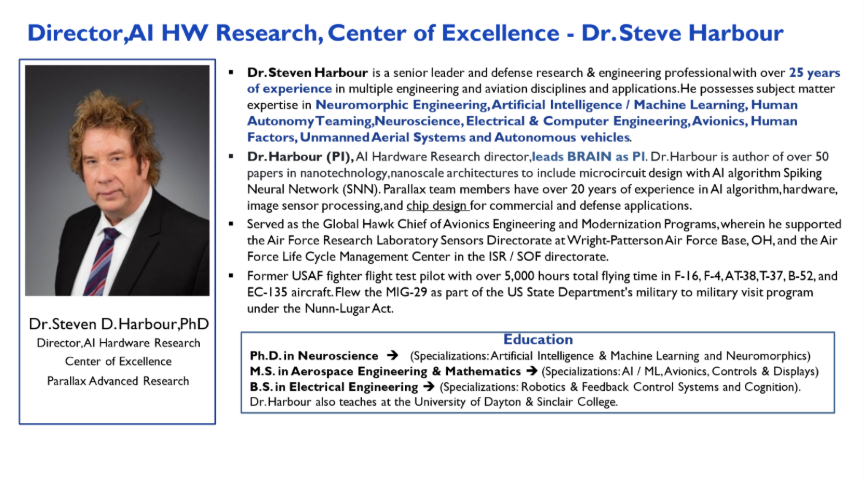

As Dr. Steven Harbour, Director, AI Hardware Research, said, “If operators see inconsistent or unexplained AI decisions, they hesitate—and you can’t afford hesitation in a raid.”

What’s at risk without assurance

Missed detections & false alarms: Unassured models can confuse decoys for warheads, lose custody on fast movers, or over-trigger on clutter—wasting scarce interceptors and creating coverage gaps.

Adversarial exploitation: Unhardened systems are vulnerable to spoofing, jamming, cyber and electronic warfare (EW) manipulation, and adversarial inputs crafted specifically to mislead AI models.

Cascade failures: Errors in correlation ripple into bad interceptor allocation and poor battle damage assessment, undermining the whole kill web.

Red-teaming defined—and why it matters

“Red-teaming is offense against your own system before the adversary does,” said Harbour.

We employ “AI that attacks AI” to stress models under decoys, jamming, clutter, and edge cases—well beyond their training data. The result: operators and commanders gain evidence-based confidence that the system performs in the fog of war, not just in the lab.
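As a rough illustration of the idea, the sketch below searches for small input perturbations that flip a model's decision. Everything in it is hypothetical: `toy_classifier`, `red_team`, the single `signature` score, and the 0.5 threshold are stand-ins chosen for illustration, not Parallax/OAI tooling or any fielded model.

```python
import random


def toy_classifier(signature: float) -> str:
    """Hypothetical stand-in model: labels a return from one score."""
    return "warhead" if signature >= 0.5 else "decoy"


def red_team(model, samples, max_noise, trials=200, seed=0):
    """Randomly perturb each sample and record the first label flip found."""
    rng = random.Random(seed)  # fixed seed keeps the search repeatable
    failures = []
    for x in samples:
        baseline = model(x)
        for _ in range(trials):
            noise = rng.uniform(-max_noise, max_noise)
            if model(x + noise) != baseline:
                failures.append((x, noise))
                break  # one flip is enough to mark this sample brittle
    return failures


samples = [0.1, 0.48, 0.52, 0.9]
failures = red_team(toy_classifier, samples, max_noise=0.05)
brittle = [x for x, _ in failures]
print(brittle)
```

In this toy case only the two samples near the decision threshold (0.48 and 0.52) are flagged, which is the kind of evidence a red-team pass is meant to produce: where the model is brittle, not just how it scores on clean data.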

How Parallax/OAI differentiates in AI assurance

Neuromorphic/adversarial testing: Use bio-inspired spiking neural networks to probe weaknesses in conventional models (e.g., spike-based deception), revealing failure modes that prime contractors often miss.

Cognitive emulation of an adaptive enemy: Build adversarial agents that behave like thinking opponents, not static scripts, to surface brittle model behavior early.

Cross-domain fusion assurance: Validate not just single models but the fusion layer where radar, EO/IR, electronic warfare, and passive RF come together—exactly where correlation errors often emerge.

Embedded self-monitoring: Lightweight “assurance sentinels” inside deployed AI flag abnormal states in real time and provide concise “why” explanations to operators, strengthening trust.
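To make the “assurance sentinel” idea concrete, here is a minimal sketch under stated assumptions: it watches a single model confidence score, compares it with a baseline recorded during testing, and returns a one-line reason when the score drifts too far. The `AssuranceSentinel` class, the `Alert` type, and the three-sigma limit are hypothetical choices for illustration, not a description of the deployed system.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Alert:
    abnormal: bool
    reason: str  # short "why" text intended for the operator


class AssuranceSentinel:
    """Hypothetical monitor comparing live confidence with a test baseline."""

    def __init__(self, baseline_scores, z_limit=3.0):
        self.mu = mean(baseline_scores)
        # Guard against a zero spread so the division below is always defined.
        self.sigma = pstdev(baseline_scores) or 1e-9
        self.z_limit = z_limit

    def check(self, score: float) -> Alert:
        z = (score - self.mu) / self.sigma
        if abs(z) > self.z_limit:
            return Alert(
                True,
                f"confidence {score:.2f} is {abs(z):.1f} sigma "
                f"from baseline mean {self.mu:.2f}",
            )
        return Alert(False, "confidence within baseline range")


sentinel = AssuranceSentinel([0.90, 0.92, 0.88, 0.91, 0.89])
normal = sentinel.check(0.90)
odd = sentinel.check(0.40)
print(normal.abnormal, odd.abnormal, odd.reason)
```

A real sentinel would likely track many internal signals rather than one score, but the pattern is the same: a cheap check running alongside the model, with an explanation attached to every flag.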

Assurance that evolves with the mission phase

Detect/Track: Emphasize robustness to noise/clutter and adversarial resistance to preserve accurate custody.

Allocate: Validate resource-optimization logic so finite shot opportunities aren’t misallocated under stress.

Engage: Require formal verification and validation, plus a human-understandable rationale that keeps a human on the loop, before any engagement decision is executed.

Maturation path: Start with high-stress simulation and live, virtual, and constructive (LVC) exercises; progress to closed-loop live-fire trials, continuously feeding red-team insights back into model retraining and user interface design.

Bottom line: Trustworthy AI isn’t a checkbox; it’s a continuous discipline. By combining neuromorphic adversarial testing, fusion-layer validation, and operator-centric explainability, Parallax/OAI helps Golden Dome field AI that’s resilient, auditable, and battle-ready.

About Dr. Steve Harbour

###

About Parallax Advanced Research and the Ohio Aerospace Institute

Parallax Advanced Research is a research institute that tackles global challenges through strategic partnerships with government, industry, and academia. It accelerates innovation, addresses critical global issues, and develops groundbreaking ideas with its partners. With offices in Ohio and Virginia, Parallax aims to deliver new solutions and speed them to market. In 2023, Parallax and the Ohio Aerospace Institute formed a collaborative affiliation to drive innovation and technological advancements in Ohio and for the nation. The Ohio Aerospace Institute plays a pivotal role in advancing the aerospace industry in Ohio and the nation by fostering collaborations between universities, aerospace industries, and government organizations, and managing aerospace research, education, and workforce development projects.